Violet Forest // ⍣٭⋆⋆⍣

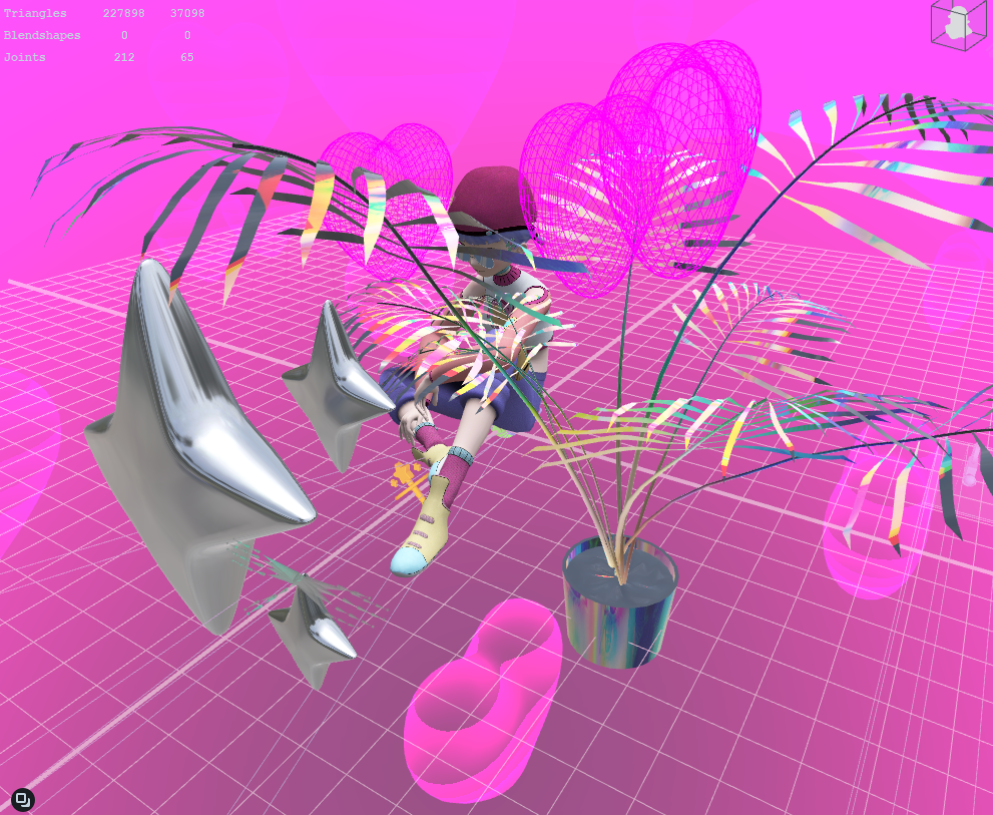

As a Technical Artist for Horizon Worlds, I collaborated with engineers to facilitate building Meta's proprietary game engine. This includes game asset ingestion for animation, VFX, USD, materials, and scripts. Programs I have been working with include Maya, Blender, PopcornFX, and Perforce. I also made a Mixed Reality prototype for the internal hackathon.

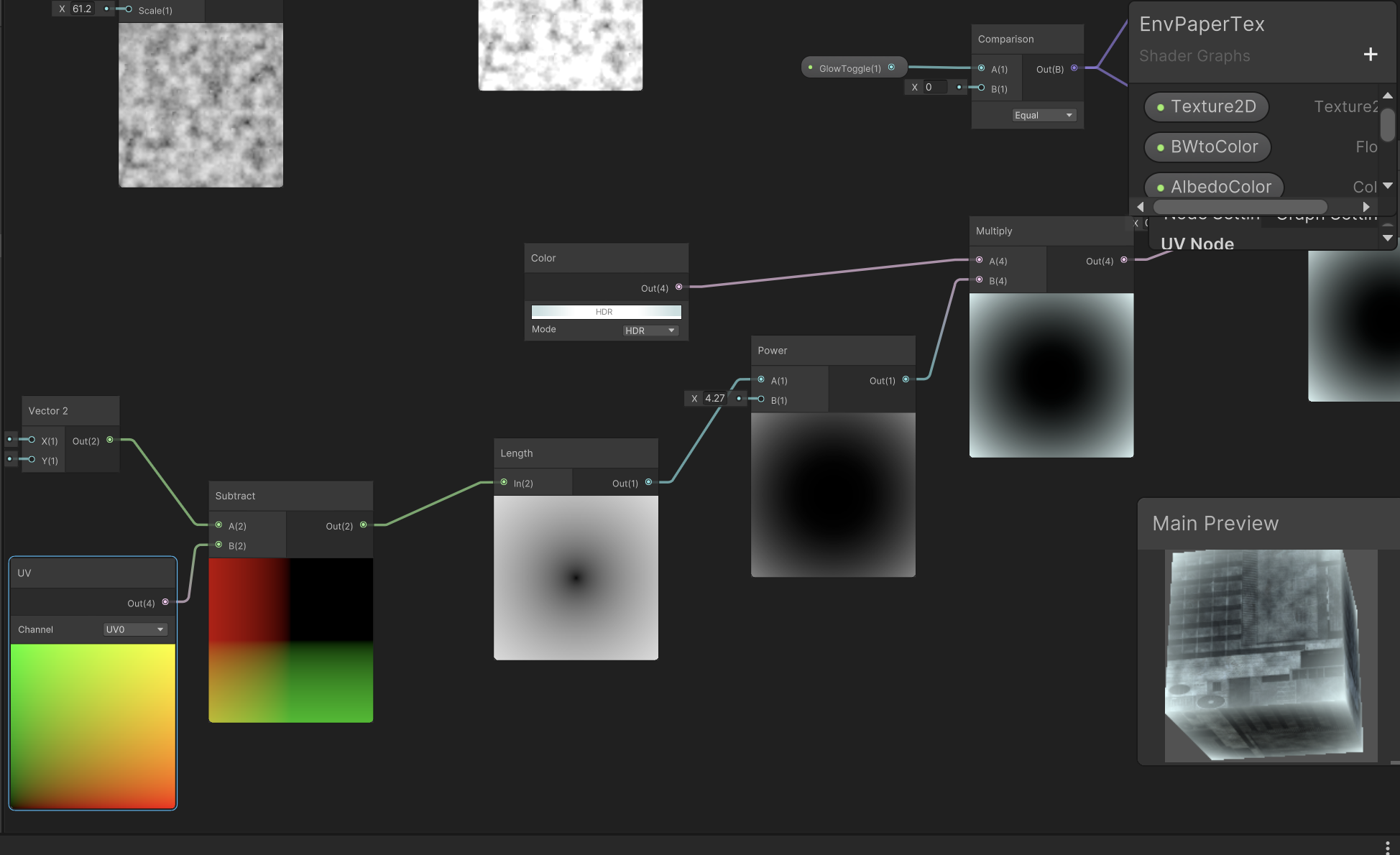

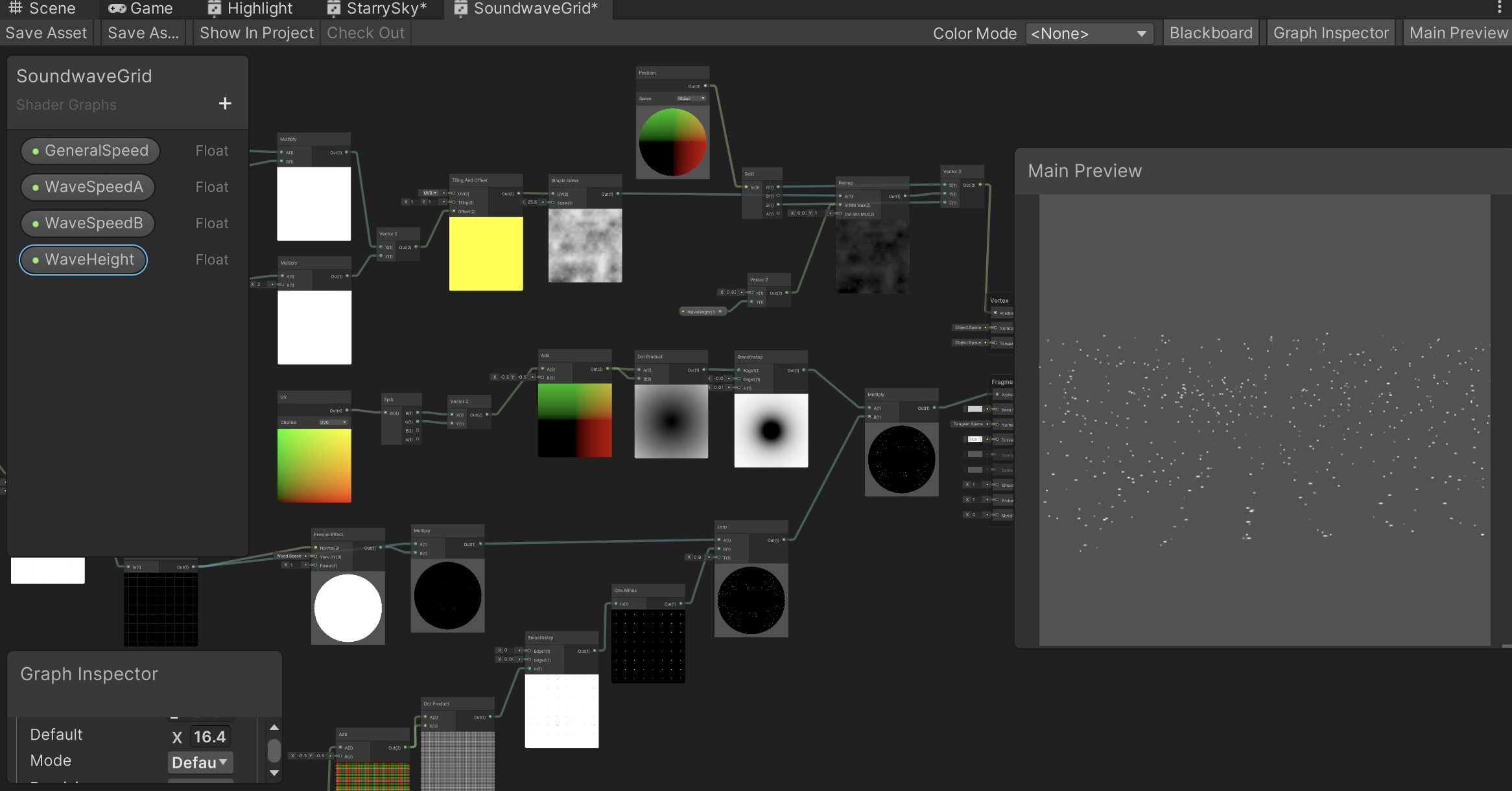

Interactive shaders with Shader Graph and scene lighting in Unity. Prototyping with Niantic Lightship.

A Unity game for WebVR and Quest 2/3. I built the game in Unity and integrated it into the website. Game mechanics included picking up objects with raycasting and a timer that ran out once the house had flooded. I also integrated the assets created by the artist and producer Michelle Brown, and was given creative control over the water shader.
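The two mechanics mentioned above can be sketched outside of any engine. The following is a minimal, language-neutral illustration in Python, not the game's actual Unity code: the names `Pickup`, `raycast_pick`, and `FloodTimer`, the use of bounding spheres for pickable objects, and the idea that the water level rises linearly with elapsed time are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class Pickup:
    """Hypothetical pickable object, approximated by a bounding sphere."""
    name: str
    center: tuple
    radius: float


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def ray_sphere_distance(origin, direction, center, radius):
    """Distance along a normalized ray to a sphere, or None on a miss.

    Assumes the ray origin lies outside the sphere.
    """
    to_center = [c - o for o, c in zip(origin, center)]
    t_closest = _dot(to_center, direction)
    if t_closest < 0:
        return None  # sphere is behind the ray
    dist_sq = _dot(to_center, to_center) - t_closest ** 2
    if dist_sq > radius ** 2:
        return None  # ray passes beside the sphere
    return t_closest - math.sqrt(radius ** 2 - dist_sq)


def raycast_pick(origin, direction, pickups, max_distance):
    """Return the nearest pickup hit by the ray within max_distance."""
    length = math.sqrt(_dot(direction, direction))
    unit = [d / length for d in direction]
    best, best_dist = None, max_distance
    for item in pickups:
        dist = ray_sphere_distance(origin, unit, item.center, item.radius)
        if dist is not None and dist <= best_dist:
            best, best_dist = item, dist
    return best


class FloodTimer:
    """Countdown that expires when the (assumed linear) flood completes."""

    def __init__(self, duration):
        self.duration = duration
        self.elapsed = 0.0

    def tick(self, dt):
        # Advance the timer by one frame's delta time, clamped at the end.
        self.elapsed = min(self.duration, self.elapsed + dt)

    @property
    def remaining(self):
        return self.duration - self.elapsed

    @property
    def water_level(self):
        # Fraction of the house that is flooded, from 0.0 to 1.0.
        return self.elapsed / self.duration

    @property
    def expired(self):
        return self.elapsed >= self.duration
```

In an engine, the ray would typically start at the controller or camera and `tick` would be called once per frame with the frame's delta time; a water shader could then read a value like `water_level` to drive the rising surface.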

In partnership with Spectacles, I was commissioned to create a Lens for Snap's Augmented Reality glasses. The Lens was released for the Spectacles and for the Snapchat app. I was given full creative control over the project. I created a sidequest in which you talk to an NPC in the world and are given a quest to gather items using hand tracking; in return for completing the quest, you are rewarded with a magic power. The concept was that when AR glasses become widely available and ubiquitous in the future, there could be an MMORPG-like game with NPCs and characters placed in the world that you could interact with throughout your day to level up things like power, armor, weapons, and other attributes.

Glitter Hearts, the latest Lens Project by @violet_forest, puts the user in a sweet AR wonderland. Reach out and collect hearts, spread glitter (and joy), and see the world made even brighter thanks to Violet's imagination brought to life. pic.twitter.com/bbh4Tjv32e

— Spectacles (@Spectacles) January 4, 2022

AR creator @violet_forest is being the change she hopes to see in the world, creating for Spectacles as a way to make the ordinary a little more extraordinary. pic.twitter.com/luTpWhqccG

— Spectacles (@Spectacles) January 5, 2022

Augmented Reality

Spark AR

Virtual Reality

Quest

Hand Tracking

Parenting particle systems to the joints of the fingers. Made with WebXR + Unity.

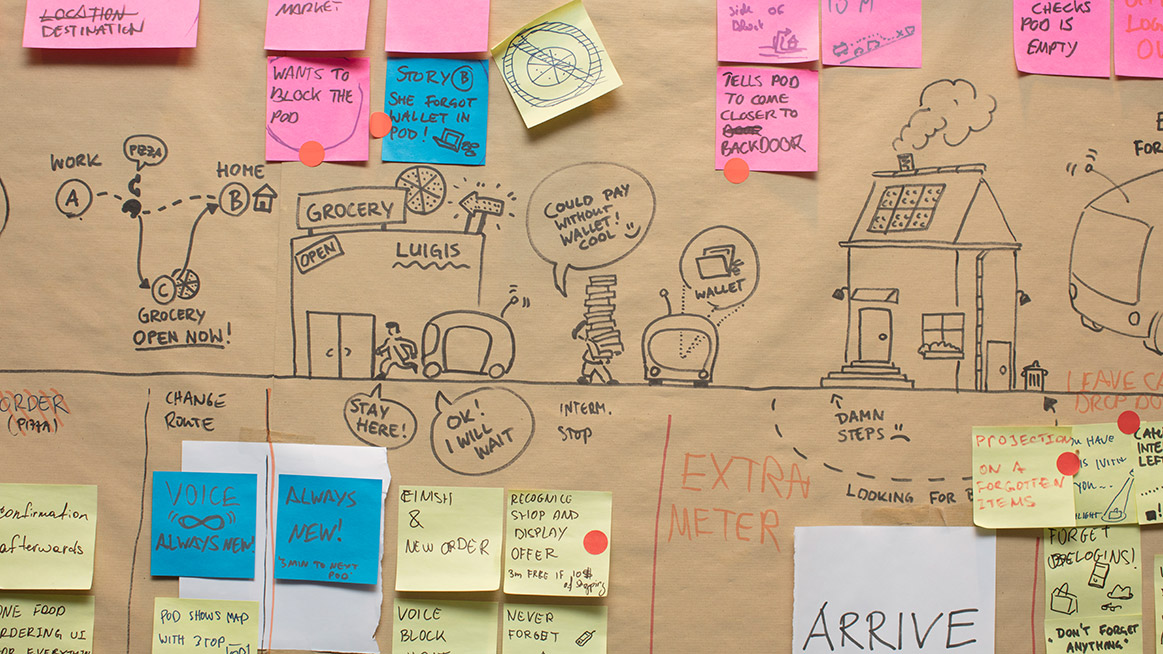

I worked as a full-time employee at Volkswagen Future Center Europe in Berlin, Germany. I collaborated with UX designers and technologists to conceptualize and rapidly prototype UX solutions for Level 3-5 self-driving vehicles in private and carpooling situations.

The following includes some rapid prototyping projects I have worked on:

We also collaborated with Porsche on a mixed-reality experience.

I was hired to make a mobile app that can detect the shape of a heart candy and place augmented reality content on top of it. This research led me to experiment with OpenCV for Unity contour detection, color detection, and training a Haar cascade for object detection. In the end I settled on using OpenCV for Unity contour detection to trigger AR Foundation's point cloud feature detection.
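To give a feel for how a contour can be classified by shape, here is a small pure-Python sketch. It is not the approach used in the app and does not call OpenCV: it uses one hypothetical heuristic, "solidity" (contour area divided by convex hull area), which drops below 1 for a heart because of the notch between the lobes. The function names, the synthetic test contours, and the 0.85 to 0.98 thresholds are all assumptions chosen for illustration.

```python
import math


def polygon_area(points):
    """Area of a closed polygon via the shoelace formula."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def convex_hull(points):
    """Convex hull using Andrew's monotone chain algorithm."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def solidity(contour):
    """Ratio of contour area to convex hull area (1.0 for convex shapes)."""
    return polygon_area(contour) / polygon_area(convex_hull(contour))


def looks_like_heart(contour, low=0.85, high=0.98):
    """Hypothetical test: mildly non-convex contours pass, convex ones fail."""
    return low < solidity(contour) < high


def heart_contour(n=400):
    """Synthetic heart outline from a well-known parametric curve."""
    pts = []
    for i in range(n):
        t = 2 * math.pi * i / n
        x = 16 * math.sin(t) ** 3
        y = (13 * math.cos(t) - 5 * math.cos(2 * t)
             - 2 * math.cos(3 * t) - math.cos(4 * t))
        pts.append((x, y))
    return pts


def circle_contour(n=400, radius=10.0):
    """Synthetic circular outline used as a convex counterexample."""
    return [(radius * math.cos(2 * math.pi * i / n),
             radius * math.sin(2 * math.pi * i / n)) for i in range(n)]
```

A single ratio like this would also accept other mildly non-convex shapes, so a real detector would likely combine it with further checks such as color or a learned classifier.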

My research in computer vision has sparked my interest in machine learning, and I am continuing that research with tools like Google's new open-source MediaPipe for hand tracking, which I used while collaborating on a mobile app to control open-source prosthetics.